Growth ❤️ Experimentation

The hypothesis-led experimentation engine that powers the modern growth team

Galileo drew conclusions about the speed of falling bodies, Lavoisier derived his theory of the conservation of mass, and Louis Pasteur fleshed out the germ theory of disease, all standing on the shoulders of the father of empiricism: Francis Bacon. Bacon pioneered the quest for repeatable observations through experiments and laid the groundwork for the scientific method as we know it today.

“The true method of experience first lights the candle [builds the hypothesis], and then by means of the candle shows the way,” writes Bacon in Novum Organum (New Instrument), “not bungling or erratic, and from it deducing axioms, and from established axioms again new experiments.”

The Baconian method soon became a scientific substitute for the ways societies had established truths. Bacon’s empirical method called for the description, classification, and acceptance of connected facts, replacing a prevailing reliance on fiction, guesses, and the citing of curiously absent authorities. Bacon, for instance, argued that healthy people should be exposed to outside influences like the cold, rain, and other sick people, and that the outcomes of these experiments must be tested for repeatability before the truth could be known.

Centuries have passed since Francis Bacon, and the last you heard of him was likely through a Reddit post, but the methods he championed have proved to be highly transferable.

Today, the core driver of growth for the fastest-growing businesses in the world is that spirit: that of experimentation.

🌟 “Scientific method applied to KPIs.”

Growth, a function born in Facebook's hallowed Menlo Park office and later popularized by its success at Airbnb, Uber, Pinterest, and more, is now a staple at all your favorite technology companies. You might know us as the people chasing answers to "How is our product growing?" or more specific questions like "How are we acquiring users?", "How can we retain them?", and "How can we get them to pay us more?". Given that this is a growth newsletter, many of you are probably growth professionals yourselves.

Brian Balfour, founder of Reforge and former VP of Growth at HubSpot, roots the need for a dedicated growth team in the following market changes:

Distribution has become more competitive and expensive

The lifecycle of channels/tactics has accelerated

Data has become more accessible

The lines between product, engineering, marketing, and sales have blurred

Connecting users to the underlying value in a product (aka distribution) has become a cross-functional effort that rapidly (but scientifically) moves between channels and tactics, solving customer problems by combining data and channel expertise.

"Most product teams are built to create or improve the core value provided to customers. Growth is connecting more people to the existing value." - Casey Winters, Chief Product Officer at Eventbrite and former Growth Lead at Pinterest and Grubhub

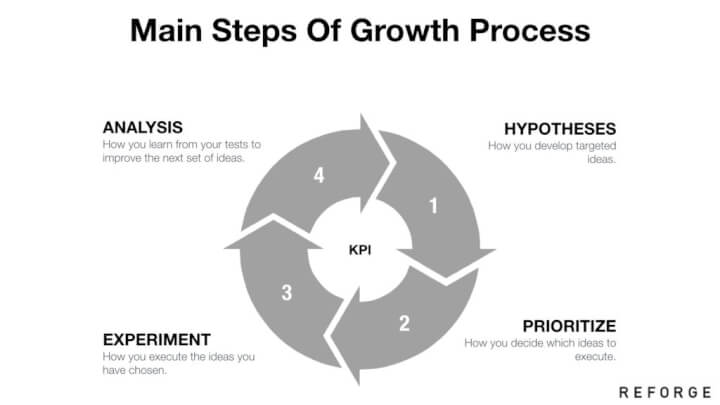

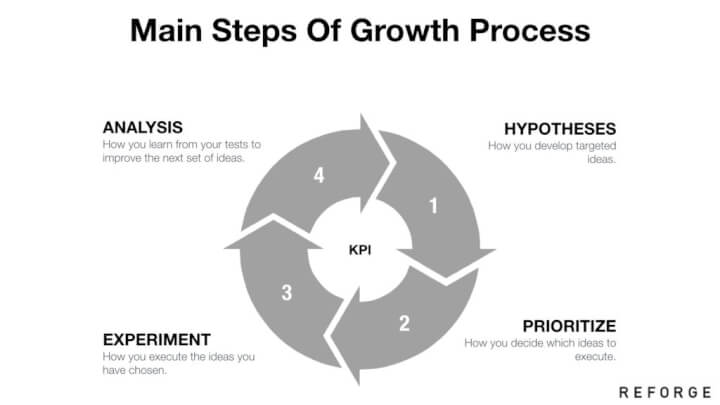

This new approach to distribution and product adoption needed a new skill set and a specialized team: the growth team, equipped with a specialized growth process built on hypothesis-led experimentation.

"Scientific method applied to KPIs" is how Steven Dupree, formerly of LogMeIn and SoFi, describes this new phenomenon.

Growth teams,

analyze user insights and data insights to identify customer problems that are blocking major adoption themes like acquisition, engagement, and monetization

attach these problems to well-defined outcome metrics like daily active users (DAUs) or paid conversion rates (monetization rates)

ideate on solutions, drawing on a combination of research, business context, and intuition

create hypotheses about how a given solution can impact an outcome metric, and by how much

and execute scientific tests to prove or disprove each hypothesis

Let's dig deeper into a day in the life of a growth experiment 🌞

🤳 But first, let me make a hypothesis

Hypothesis | pronounced: /hʌɪˈpɒθɪsɪs/ | noun -

"a supposition or proposed explanation made on the basis of limited evidence as a starting point for further investigation"; also, the cornerstone of scientific experimentation

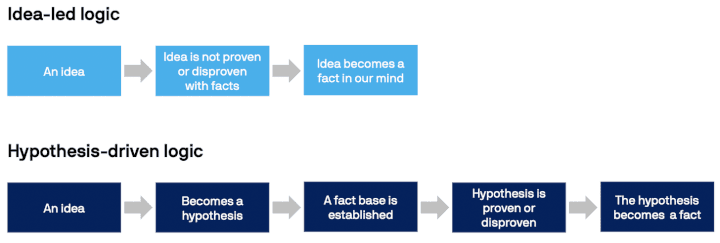

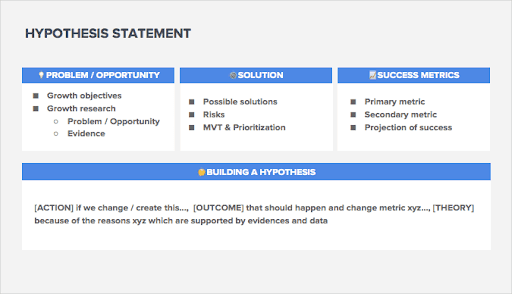

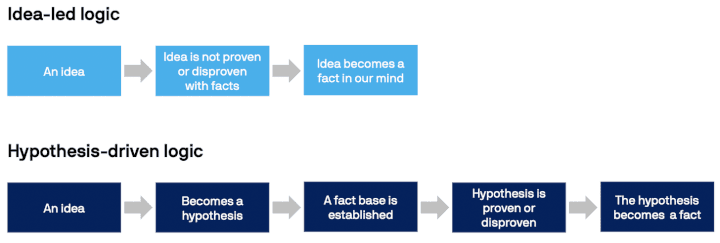

While ideas are plagued by biases, myths, and false facts, a hypothesis is clear, scientific, and testable.

When test results show whether the outcome metric has increased, decreased, or remained the same, the hypothesis is validated or invalidated accordingly.
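As a rough sketch of that decision rule, the Python below assumes each hypothesis records its outcome metric and the direction of change it predicts, and that the analysis has already produced a confidence interval for the lift (variant minus control). `Hypothesis` and `evaluate` are hypothetical names, and reading an interval that straddles zero as "remained the same" (and therefore as an invalidation) is one common convention; some teams label that case inconclusive instead.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    """A testable statement: changing X will move a metric in a given direction."""
    change: str
    metric: str
    expected_direction: int  # +1 = expect an increase, -1 = expect a decrease


def evaluate(h: Hypothesis, lift_ci: tuple[float, float]) -> str:
    """Classify a hypothesis from a (lower, upper) confidence interval on the lift."""
    lower, upper = lift_ci
    if lower > 0:
        observed = +1  # metric increased
    elif upper < 0:
        observed = -1  # metric decreased
    else:
        observed = 0   # interval contains zero: metric remained the same
    return "validated" if observed == h.expected_direction else "invalidated"


h = Hypothesis(
    change="More detailed product copy",
    metric="paid conversion rate",
    expected_direction=+1,
)
print(evaluate(h, (0.004, 0.021)))   # validated
print(evaluate(h, (-0.003, 0.010)))  # invalidated
```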

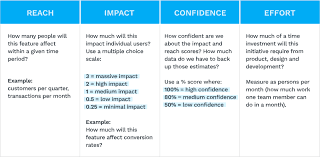

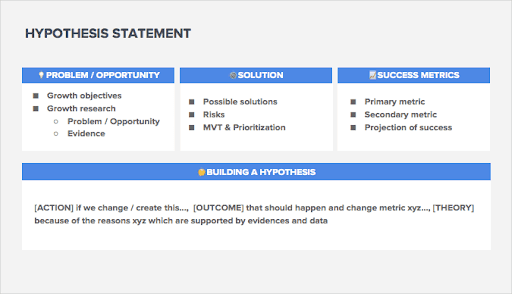

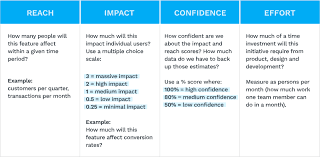

Prioritization: Growth teams create multiple hypotheses that address varied problems. These are converted into individual tests, prioritized by reach 🟢, impact 🟢, confidence 🟢, and effort 🔴, and added to the experimentation roadmap accordingly. These roadmaps are managed in shared workspaces to make scheduling, execution, and reporting easier.
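Scoring by reach, impact, confidence, and effort is commonly known as RICE: score = reach × impact × confidence ÷ effort. A minimal sketch, assuming made-up backlog entries and illustrative scales (reach in users per quarter, impact from 0.25 to 3, confidence as a fraction, effort in person-weeks); teams calibrate these scales differently.

```python
from dataclasses import dataclass


@dataclass
class TestIdea:
    name: str
    reach: float       # users affected per quarter (assumed unit)
    impact: float      # e.g. 0.25 = minimal ... 3 = massive (assumed scale)
    confidence: float  # 0.0 to 1.0
    effort: float      # person-weeks (assumed unit)

    @property
    def rice(self) -> float:
        # Reach, impact, and confidence raise the score; effort lowers it.
        return self.reach * self.impact * self.confidence / self.effort


# Hypothetical backlog entries, not real data.
backlog = [
    TestIdea("Rewrite feature description", reach=20_000, impact=1, confidence=0.8, effort=1),
    TestIdea("New onboarding checklist", reach=5_000, impact=2, confidence=0.5, effort=4),
    TestIdea("Redesign pricing page", reach=12_000, impact=3, confidence=0.5, effort=6),
]

# Highest score first: this ordering seeds the experimentation roadmap.
roadmap = sorted(backlog, key=lambda idea: idea.rice, reverse=True)
for idea in roadmap:
    print(f"{idea.rice:>8.0f}  {idea.name}")
```

Dividing by effort means a cheap test with modest impact can outrank an ambitious but expensive one, which suits a team that wants to run many experiments quickly.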

The chosen ones 🧙🏻‍♀️ enter the next phase: the testing stage! 👨🏻‍🔬

🪄 The potions and the spells

“Our success at Amazon is a function of how many experiments we do per year, per month, per week, per day.” - Jeff Bezos

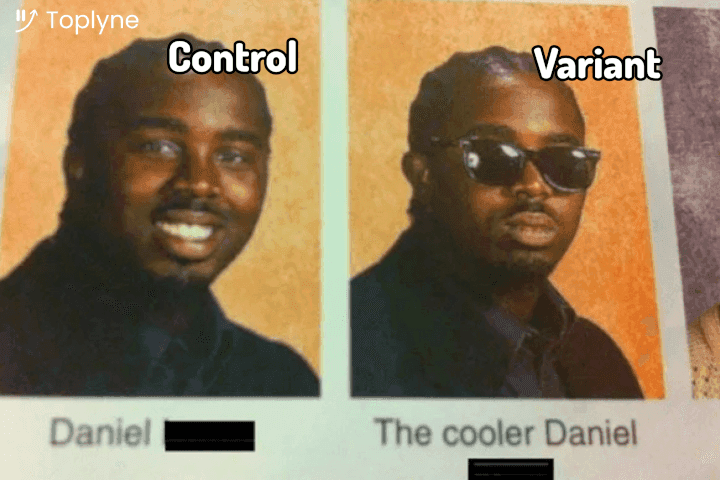

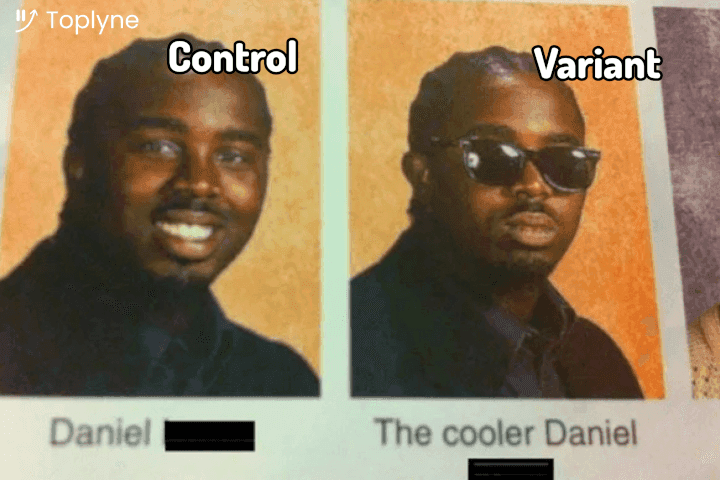

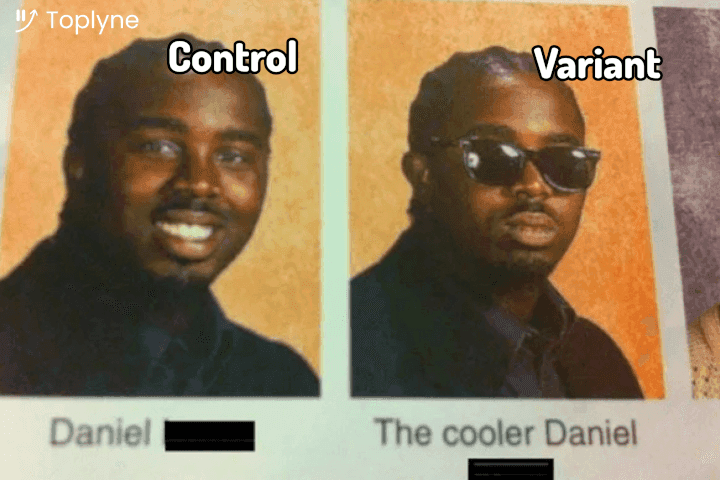

Growth experiments borrow software deployment mechanisms like remote config and feature flags (we pinged our engineering team for this 🤭), which enable personalization and rolling releases. A control and a variant (a modification, a new addition, an omission from the default experience, etc.) are shown to randomly split, comparable groups of unsuspecting users, and the hypothesis is validated by observing changes in the outcome metric between the two versions.
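Comparing the outcome metric between the two versions usually starts with a per-version conversion rate and the relative lift of the variant over the control. A minimal sketch with illustrative counts (not real data); whether a lift is more than noise is a separate question for the statistical significance step.

```python
def conversion_rate(conversions: int, users: int) -> float:
    """Share of exposed users who completed the outcome event."""
    return conversions / users if users else 0.0


def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Relative change of the variant over the control, e.g. 0.25 means +25%."""
    if control_rate == 0:
        raise ValueError("control rate is zero; relative lift is undefined")
    return (variant_rate - control_rate) / control_rate


# Illustrative numbers only: users exposed and users converted per version.
control = conversion_rate(conversions=400, users=10_000)
variant = conversion_rate(conversions=500, users=10_000)
lift = relative_lift(control, variant)
print(f"control {control:.2%}, variant {variant:.2%}, lift {lift:+.1%}")
```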

Tests vary widely in scale:

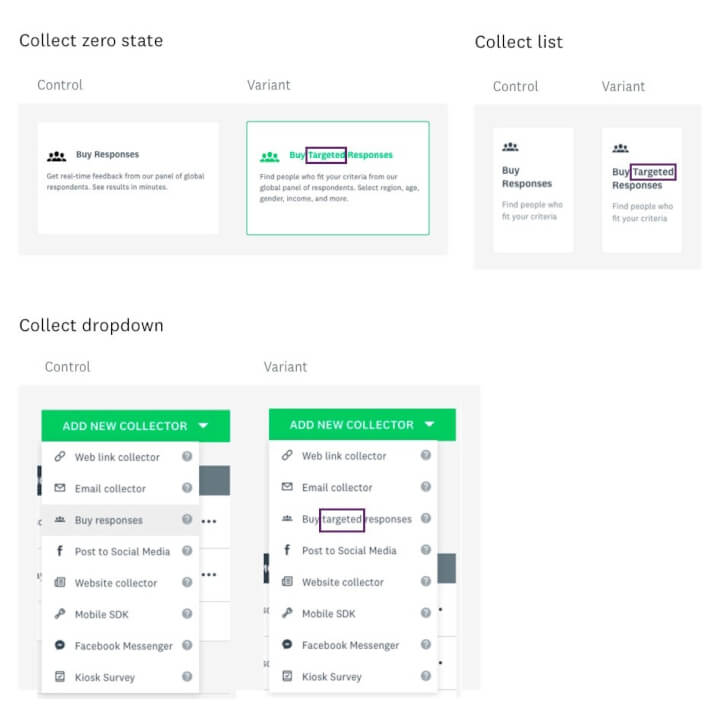

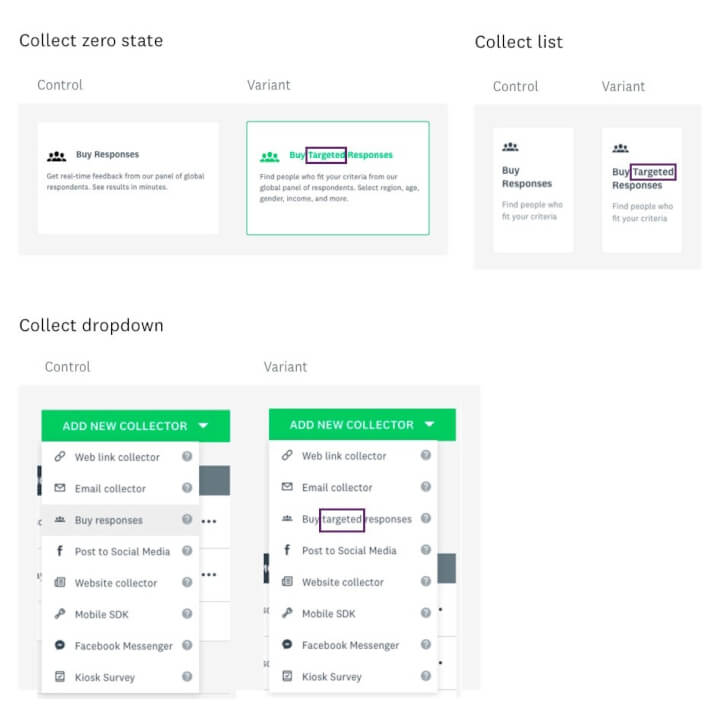

From SurveyMonkey changing copy to increase adoption of its “Audience collector” feature

“We hypothesized that using more explicitly detailed copy to describe the product would enable users to more easily determine if it was the right product for them. We identified which triggers our users engaged with the most and used those to test our hypothesis.” - Christopher D Scott, Growth Engineer at SurveyMonkey

to Uber's full-fledged Uber-XP experimentation platform, which runs 1,000 experiments at any point in time.

The underlying recipe 👨🏻‍🍳, however, remains the same:

🛠 Identifying the test method - Different experiments warrant different test methods. A/B tests (one change), multivariate tests (several changes tested in combination), and split URL tests (traffic redirected to an entirely different page) each suit different kinds of hypotheses.
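The difference between an A/B test and a multivariate test shows up in how variants are generated. A full-factorial multivariate test serves every combination of the options being tested, so the number of variants (and the traffic needed) multiplies quickly. The factors and option names below are hypothetical.

```python
from itertools import product

# Hypothetical factors; the first option in each list is the current (control) version.
factors = {
    "headline": ["current", "benefit-led"],
    "description": ["short", "detailed"],
    "button_color": ["blue", "green"],
}

# A/B test: change one factor and keep everything else at its control value.
control = {name: options[0] for name, options in factors.items()}
ab_variant = {**control, "headline": factors["headline"][1]}

# Full-factorial multivariate test: every combination of every option.
mvt_variants = [dict(zip(factors, combo)) for combo in product(*factors.values())]

print(len(mvt_variants))           # 2 x 2 x 2 = 8 combinations, including the control
print(mvt_variants[0] == control)  # True: the first combination is all-control
```

With three two-option factors the traffic is split eight ways instead of two, which is why multivariate tests usually need far more users than an A/B test to reach a conclusion.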

👨🏻‍💻 Creating the control and the variant - Versions are either created on the client side (variants live and render in the user’s browser) or built separately on the server side. Config settings and feature flags ensure that the audience is split equally (or as prescribed) between the two versions.
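Feature-flag and remote-config tools commonly make the split deterministic by hashing a stable user ID together with the experiment name, so a returning user always sees the same version. A minimal sketch of that idea, assuming hypothetical user and experiment names; SHA-256 is used only for its evenly spread output, and the weights express either an equal or a "prescribed" split.

```python
import hashlib

BUCKETS = 10_000  # resolution of the split: each bucket is 0.01% of traffic


def bucket(user_id: str, experiment: str) -> int:
    """Map a user to a stable bucket in [0, BUCKETS) for one experiment."""
    key = f"{experiment}:{user_id}".encode()
    return int(hashlib.sha256(key).hexdigest(), 16) % BUCKETS


def assign(user_id: str, experiment: str, weights: dict[str, float]) -> str:
    """Return this user's version; weights are traffic shares that sum to 1."""
    point = bucket(user_id, experiment) / BUCKETS  # a value in [0, 1)
    cumulative = 0.0
    for version, weight in weights.items():
        cumulative += weight
        if point < cumulative:
            return version
    # Floating-point rounding can leave the running total just under 1.
    return list(weights)[-1]


equal_split = {"control": 0.5, "variant": 0.5}
prescribed_split = {"control": 0.9, "variant": 0.1}

# The same user always lands in the same version of the same experiment.
print(assign("user-42", "copy-test", equal_split))
```

Including the experiment name in the hash means a user's bucket in one test does not determine their bucket in another.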

📈 Metrics to be measured - Well-defined primary and secondary metrics are chosen, and the instrumentation needed to report them is set up.

For SurveyMonkey, the number of users who bought the “Audience collector” feature is the primary metric, while the number of clicks on the “Buy responses” button can serve as a secondary metric. The test validated that adding the word “targeted” and changing the description communicated the product's value better, resulting in higher conversion. 🙌🏻
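Instrumentation ultimately produces events that are rolled up per version. A minimal sketch, assuming a hypothetical event log of `(user_id, version, event_name)` rows; `purchase_audience_collector` stands in for the primary metric and `click_buy_responses` for the secondary one, and each is counted once per user.

```python
from collections import defaultdict

# Hypothetical event log: (user_id, version, event_name).
events = [
    ("u1", "control", "exposed"), ("u1", "control", "click_buy_responses"),
    ("u2", "control", "exposed"),
    ("u3", "variant", "exposed"), ("u3", "variant", "click_buy_responses"),
    ("u3", "variant", "purchase_audience_collector"),
    ("u4", "variant", "exposed"), ("u4", "variant", "click_buy_responses"),
]

PRIMARY = "purchase_audience_collector"
SECONDARY = "click_buy_responses"


def metric_rates(events, metrics):
    """Per version, the share of exposed users who fired each metric event at least once."""
    users_by = defaultdict(lambda: defaultdict(set))  # version -> event -> {user_ids}
    for user_id, version, event in events:
        users_by[version][event].add(user_id)
    rates = {}
    for version, by_event in users_by.items():
        exposed = len(by_event["exposed"]) or 1  # avoid dividing by zero
        rates[version] = {m: len(by_event[m]) / exposed for m in metrics}
    return rates


print(metric_rates(events, [PRIMARY, SECONDARY]))
```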

👥 Segmentation and targeting - On certain occasions, a test's audience needs to be segmented by basic user properties like geography, device, and browser, and sometimes by more complex product usage triggers.

User segmentation can also be used to restrict a test to a small sample of users, which makes it possible to run multiple parallel tests that are independent of each other.
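Targeting and isolation can be combined in one eligibility check: a user must match the test's segment, and each test owns a disjoint slice of hashed traffic so that concurrent tests never share a user. A minimal sketch; the user properties, experiment names, and slice boundaries are hypothetical, and real platforms often use more elaborate layering schemes.

```python
import hashlib
from dataclasses import dataclass


@dataclass
class User:
    user_id: str
    country: str
    device: str


@dataclass
class Experiment:
    name: str
    countries: set[str]
    devices: set[str]
    slice_start: int  # inclusive, in [0, 100)
    slice_end: int    # exclusive


def traffic_slot(user_id: str) -> int:
    """Stable slot in [0, 100) shared by every experiment in this layer."""
    return int(hashlib.sha256(user_id.encode()).hexdigest(), 16) % 100


def eligible(user: User, exp: Experiment) -> bool:
    in_segment = user.country in exp.countries and user.device in exp.devices
    in_slice = exp.slice_start <= traffic_slot(user.user_id) < exp.slice_end
    return in_segment and in_slice


# Two tests, each restricted to a small, non-overlapping 10% slice of traffic.
experiments = [
    Experiment("checkout-copy", {"US", "CA"}, {"ios", "android"}, 0, 10),
    Experiment("pricing-badge", {"US"}, {"web"}, 10, 20),
]

user = User("user-42", "US", "web")
print([exp.name for exp in experiments if eligible(user, exp)])
```

The slot is hashed from the user ID alone, not the experiment name, which is what keeps the slices mutually exclusive; assigning control or variant inside each slice would be a separate step.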

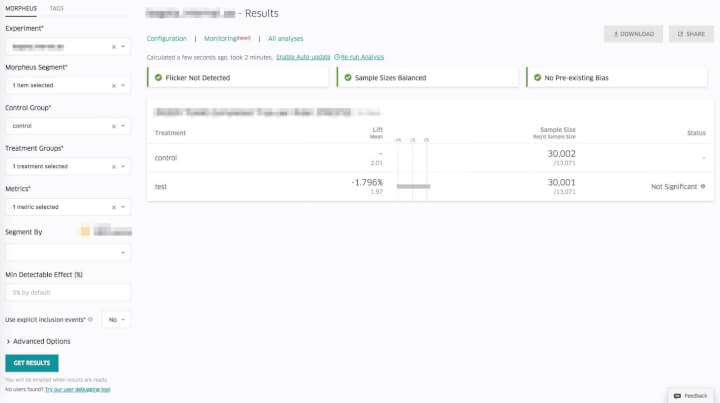

📊 Statistical significance - Determining the minimum sample size required for reliable results, and when a test can be considered complete. Either the frequentist approach (p-values and statistical power) or the Bayesian approach can be used for this.
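Both approaches can be sketched for a conversion-rate test using only the Python standard library. The sample-size formula is the usual normal approximation for comparing two proportions, the p-value comes from a pooled two-proportion z-test, and the Bayesian function estimates the probability that the variant beats the control under uniform Beta(1, 1) priors by Monte Carlo sampling. The 4% to 5% rates and the counts are illustrative assumptions, not real results.

```python
import math
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def sample_size_per_arm(p_control: float, p_variant: float,
                        alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed per arm for a two-sided two-proportion test."""
    z_alpha = STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_power = STD_NORMAL.inv_cdf(power)
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    effect = p_variant - p_control
    return math.ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


def two_proportion_p_value(x_c: int, n_c: int, x_v: int, n_v: int) -> float:
    """Frequentist: two-sided p-value of a pooled two-proportion z-test."""
    pooled = (x_c + x_v) / (n_c + n_v)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_c + 1 / n_v))
    z = (x_v / n_v - x_c / n_c) / se
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def prob_variant_beats_control(x_c: int, n_c: int, x_v: int, n_v: int,
                               draws: int = 20_000, seed: int = 7) -> float:
    """Bayesian: P(variant rate > control rate) with Beta(1, 1) priors, by sampling."""
    rng = random.Random(seed)
    wins = sum(
        rng.betavariate(1 + x_v, 1 + n_v - x_v) > rng.betavariate(1 + x_c, 1 + n_c - x_c)
        for _ in range(draws)
    )
    return wins / draws


# Plan: users per arm to detect 4% -> 5% at alpha = 0.05 with 80% power.
print(sample_size_per_arm(0.04, 0.05))
# Analyze: illustrative results once each arm has 10,000 users.
print(two_proportion_p_value(400, 10_000, 500, 10_000))
print(prob_variant_beats_control(400, 10_000, 500, 10_000))
```

In the frequentist setup, a test is usually treated as complete once each arm reaches the planned sample size; checking the p-value repeatedly and stopping at the first significant result inflates the false-positive rate.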

🔍 Analysis and reporting - Dashboards, heatmaps, and other visualization tools for reading test results.

While Uber and Spotify have built out internal tools and dashboards for running these experiments, many plug-and-play solutions exist that allow Growth teams to execute and analyze tests with minimal setup time.

Last but not least, let’s discuss who mans this engine… Growth hackers!? 🤨

😤 "No! We're not hackers"

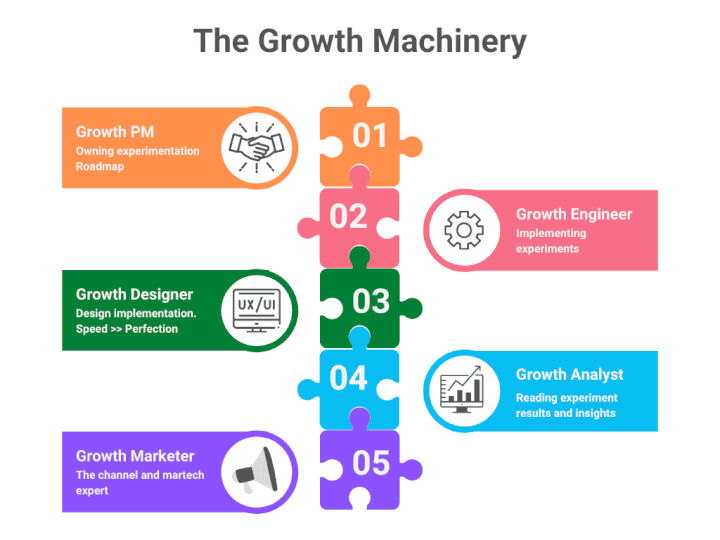

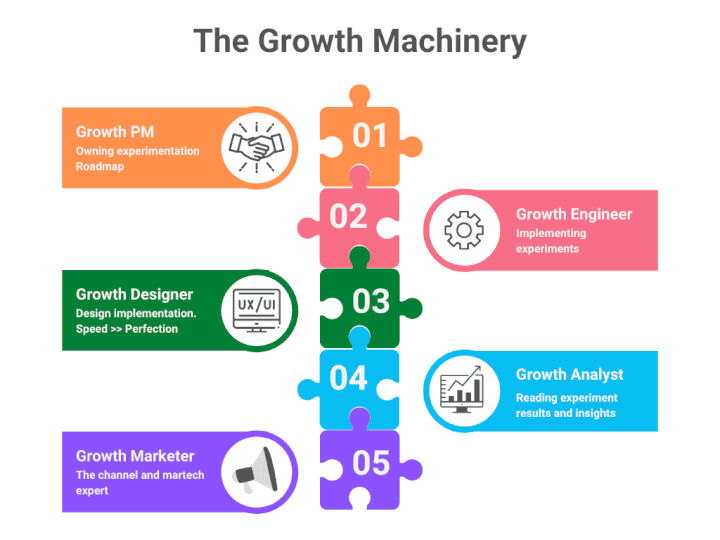

While our ancestry can be traced back to solo magicians who moved numbers with unconventional ‘hacks’, growth today is a team game. Whether organized in standalone pods or distributed across the product, design, engineering, and marketing verticals, growth teams are cross-functional units capable of managing, implementing, and analyzing an experimentation engine.

Importantly, successful growth teams are backed by organization-wide buy-in to the experimentation way of life and are given the resources and autonomy they need to succeed. 🤝

Galileo made conclusions about the speed of falling bodies, Lavoisier derived his theories of conservation of mass, and Louis Pasteur fleshed out the germ theory of diseases all on the shoulders of the father of empiricism: Francis Bacon. Francis Bacon pioneered the quest for repeatable observations through experiments and laid the groundwork for the scientific method as we know it today.

“The true method of experience first lights the candle (builds the hypothesis), and then by means of the candle shows the way”, writes Bacon in Novum Organum (New Instrument), “not bungling or erratic, and from it deducing axioms, and from established axioms again new experiments.”

The Baconian method soon evolved to become a scientific substitute for the way truths were established in societies. Bacon’s empirical method dictated a description, classification, and acceptance of connected facts and replaced a prevailing reliance on fiction, guesses, and the citing of curiously absent authorities. Bacon, for instance, argued that healthy people should be exposed to outside influences like the cold, rain, and other sick people - and that the outcome of these experiments must be tested for repeatability before the truth could be known.

Centuries have passed since Francis Bacon, and the last you heard of him was likely through this Reddit post, but the methods he championed have proved to be highly transferable.

Today, the core driver of growth for the fastest-growing businesses in the world is that spirit: that of experimentation.

🌟 “Scientific method applied to KPIs.”

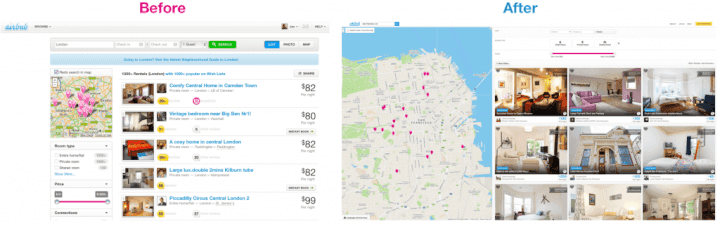

Growth - A function that took birth in Facebook's hallowed Menlo Park office and later popularized by its success at Airbnb, Uber, Pinterest, and more, is now a staple at all your favorite technology companies. You might know us as people chasing answers for "How is our product growing?" or more specific ones like "How are we acquiring users?", "How can we retain them?", "How can we get them to pay us more?". Given that this is a growth newsletter, a lot of you are probably growth professionals yourself.

Brian Balfour, Founder of Reforge and ex - VP Growth at Hubspot roots the need for a dedicated growth team in the following market changes:

Distribution has become more competitive and expensive

The lifecycle of channels/tactics has accelerated

Increase in the accessibility of data

The blurring lines of product/Engineering Blog/marketing/sales

Connecting users to the underlying value in a product (aka Distribution) has become a cross-functional effort that rapidly (but scientifically) skips between channels and tactics, solving customer problems by combining data and channel expertise.

"Most product teams are built to create or improve the core value provided to customers. Growth is connecting more people to the existing value." - Casey Winters Chief Product Officer at Eventbrite and former Growth Lead at Pinterest/Grubhub

This new approach to distribution and driving product adoption needed a new skill set and a specialized team - The Growth Team, equipped with a specialized Growth Process - one of Hypothesis led experimentation.

"Scientific method applied to KPI's" is how Steven Dupree, ex - Logmein, and SoFi describes this new phenomena.

Growth teams,

analyze user insights and data insights to identify customer problems that are blocking major adoption themes like acquisition, engagement, and monetization

attach these problems to well-defined outcome metrics like daily active users (DAUs), or paid conversion rates (Monetization rates)

ideate on solutions - a combination of research, business context, and intuition

create hypotheses on how a certain solution can impact an outcome metric and by how much

and execute scientific tests to prove (/disprove) a hypothesis

Let's dig deeper into a day in the life of a Growth Experiment 🌞

🤳 But first, let me make a hypothesis

Hypothesis | pronounced: hʌɪˈpɒθɪsɪs/ | noun -

"a supposition or proposed explanation made on the basis of limited evidence as a starting point for further investigation." also - the cornerstone of scientific experimentation

While ideas are plagued with biases, myths, and false facts, a hypothesis is clear, scientific, and testable.

When test results conclude that the outcome metric has remained the same/ increased/ decreased, a hypothesis stands validated/invalidated

Prioritization: Growth teams create multiple hypotheses that can solve varied problems and these are converted into individual tests prioritized by reach 🟢, impact 🟢, confidence 🟢 and effort 🔴 and are accordingly added to the experimentation roadmap. These experimentation roadmaps are managed in workspaces for scheduling, executing, and reporting ease.

The chosen ones 🧙🏻♀️ enter the next phase - the testing stage! 👨🏻🔬

🪄 The potions and the spells

Our success at Amazon is a function of how many experiments we do per year, per month, per week, per day. - Jeff Bezos

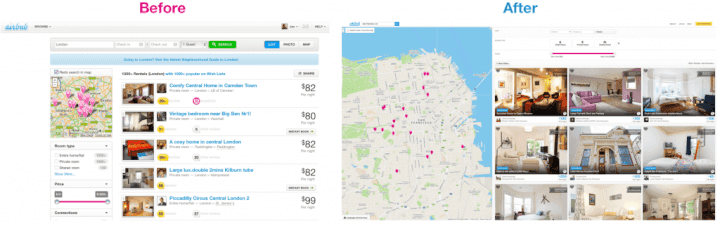

Growth experiment tests mimic software deployment processes like remote config and feature flags (we pinged our Engineering Blog team for this 🤭) that enable personalization and rolling releases. Control and variant versions (a modification, a new addition, omission from the normal, etc) are shown to a homogenous split of unsuspecting users and the hypothesis is validated by observing changes in the outcome metric between the two versions.

A test could be any of the following:

From Surveymonkey changing copy to increase adoption of their "Audience collector” feature

“We hypothesized that using more explicitly detailed copy to describe the product would enable users to more easily determine if it was the right product for them. We identified which triggers our users engaged with the most and used those to test our hypothesis.” - Christopher D Scott, Growth Engineer at Surveymonkey

to Uber's full-fledged Uber-XP experimentation platform that runs 1000 experiments at any point in time

The underlying recipe 👨🏻🍳, however, remains the same:

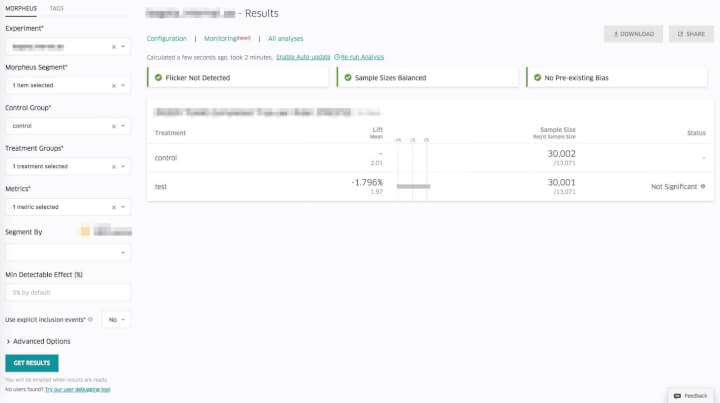

🛠 Identify the test method - Different experiments warrant different test methods. A/B tests (1 change), Multivariate tests (multiple changes), and Split URL testing (redirecting traffic to an entirely different page) are methods that can be used to test out different hypotheses.

👨🏻💻 Creating the control and the variant - Different versions are created either on the client-side (variants live and render on the user’s browser) or are built separately on the server-side. Config settings and feature flags ensure that the audience is split equally (or as prescribed) between the two versions.

📈 Metrics to be measured - Well-defined primary and secondary objective metrics and the necessary instrumentation to report them are set up.

The number of users who bought the “Audience collector feature” is the primary metric for Surveymonkey while the number of clicks on the “Buy responses” button can be a secondary metric. The test validated that adding “targetted” and changing the description communicated the product value better resulting in better conversion. 🙌🏻

👥 Segmentation and targeting - The audience for a test on certain occassions needs to be segmented basis basic user properties like - geography, device used, browser used, and sometimes with more complex product usage triggers.

User segmentation can also be used to restrict the test to a small sample of users enabling multiple parallel tests that are independent of each other.

📊 Statistical significance - determining the minimum sample size required for accurate test results and for when a test can be considered complete. The statistical significance approach called the frequentist method( p-value & statistical power) or the Bayesian approach are used for the same.

🔍 Analysis and reporting - Dashboards, heatmaps, and other visualization tools for reading test results.

While Uber and Spotify have built out internal tools and dashboards for running these experiments, many plug-and-play solutions exist that allow Growth teams to execute and analyze tests with minimal setup time.

Last but not the least, let’s discuss who mans this engine… Growth hackers!? 🤨

😤 "No! We're not hackers"

While our ancestry can be traced back to solo magicians who moved numbers with unconventional ‘hacks’, growth today is a team game. Organized in siloed pods or alternatively distributed across the product, design, Engineering Blog, and marketing verticals, growth teams are cross-functional machinery capable of managing, implementing, and analyzing an experimentation engine.

It’s very important to note that successful growth teams are backed by an organization-wide buy-in to the experimentation way of life and are provided the required resources and autonomy to succeed. 🤝

Copyright © Toplyne Labs PTE Ltd. 2024